Author: Mel Borg | Posted On: 27 Aug 2025

Australia has taken a step forward in ensuring artificial intelligence is developed and deployed responsibly. The release of the Australian Responsible AI Index 2025, published by Fifth Quadrant and sponsored by the National AI Centre (NAIC), not only measures how organisations are progressing but also introduces a nationwide Responsible AI Self-Assessment Tool.

This initiative comes at a time when AI is moving rapidly from experimentation to business-critical implementation. The Index provides a year-on-year benchmark of responsible AI maturity in Australia, while the new tool gives organisations a practical way to understand their current capabilities, benchmark against peers, and receive tailored guidance aligned with the Voluntary AI Safety Standard (VAISS).

Productivity gains, but a confidence gap

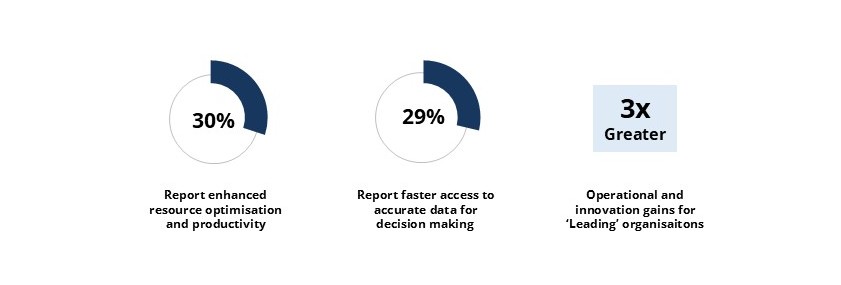

The 2025 Index shows that organisations are already realising measurable benefits from responsible AI adoption.

However, the findings reveal a concerning confidence–implementation gap. Many organisations express confidence in their responsible AI practices, but far fewer have put these into action. For example:

- 58% claim confidence in human oversight, yet only 23% have fully implemented it

- Similar gaps exist in testing, monitoring, and contestability

This disconnect highlights a risk: as AI adoption scales, organisations may be overestimating their readiness, leaving gaps in governance and trust.

Small businesses falling behind

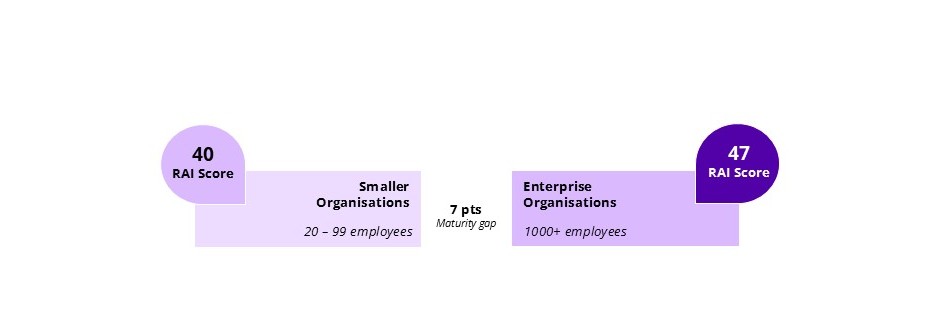

The Index highlights a growing divide between larger enterprises and smaller organisations. Only 9% of smaller businesses have reached the ‘Leading’ segment, compared to 21% of enterprises, while smaller firms score seven points lower in maturity, on average.

Given the central role small businesses play in the Australian economy, this maturity gap could limit the broader national benefits of responsible AI. The Index calls for simplified frameworks and targeted capability-building initiatives to ensure smaller businesses are not left behind.

Stronger safeguards in high-risk use cases

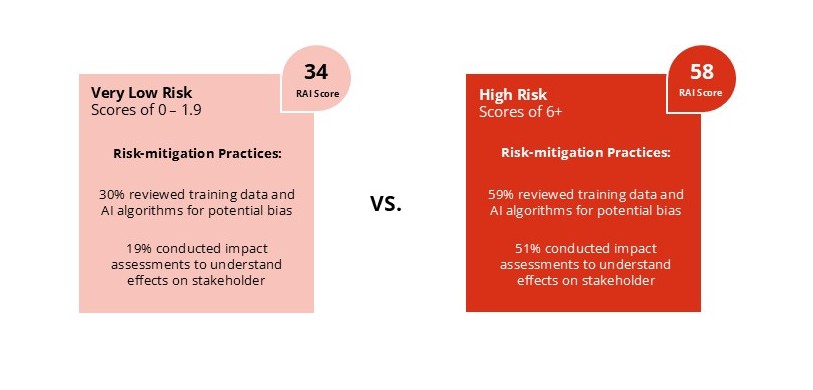

Interestingly, organisations deploying AI in high-risk contexts, such as recruitment or compliance, are demonstrating stronger governance. High-risk users score higher on maturity than very-low-risk users and are implementing risk-mitigation strategies at a higher rate.

This suggests that when the stakes are higher, organisations are more likely to adopt robust responsible AI practices, which is an encouraging sign that governance frameworks can scale with risk.

The Responsible AI Self-Assessment Tool

At the heart of this year’s Index launch is the Responsible AI Self-Assessment Tool—Australia’s first national resource of its kind.

The tool enables organisations to:

- Measure their maturity against the Responsible AI Index framework

- Benchmark performance against peers across industries

- Receive tailored guidance on practical next steps

Bridging the gap

The Responsible AI Index 2025 sends a clear message: while progress is being made, there is still work to do. Productivity and efficiency gains show the tangible value of responsible AI, but the confidence–implementation gap and small business lag highlight the need for continued support.

Government and industry collaboration will be key to bridging this divide. The Index calls for promoting uptake of the Self-Assessment Tool and ensuring frameworks remain accessible and practical.

Access the full Australian Responsible AI Index 2025 report and Self-Assessment Tool here
