Author: Dr Steve Nuttall | Posted On: 16 Nov 2023

Large language models (LLMs) are a type of artificial intelligence (AI) that can predict, generate and understand human language after being trained on large amounts of data. LLMs are still under development, but they have the potential to revolutionise many industries, including healthcare. By harnessing the capabilities of LLMs, healthcare providers can improve diagnostic accuracy, streamline record-keeping and enhance patient engagement. LLMs must also be used responsibly, with primary regard for patient confidentiality and privacy, and without harming patients or the wider community.

In this blog post, we examine our latest survey of the use of LLMs by GPs and discuss the ethical issues and safety considerations of using LLMs in healthcare.

We surveyed 183 Australian GPs

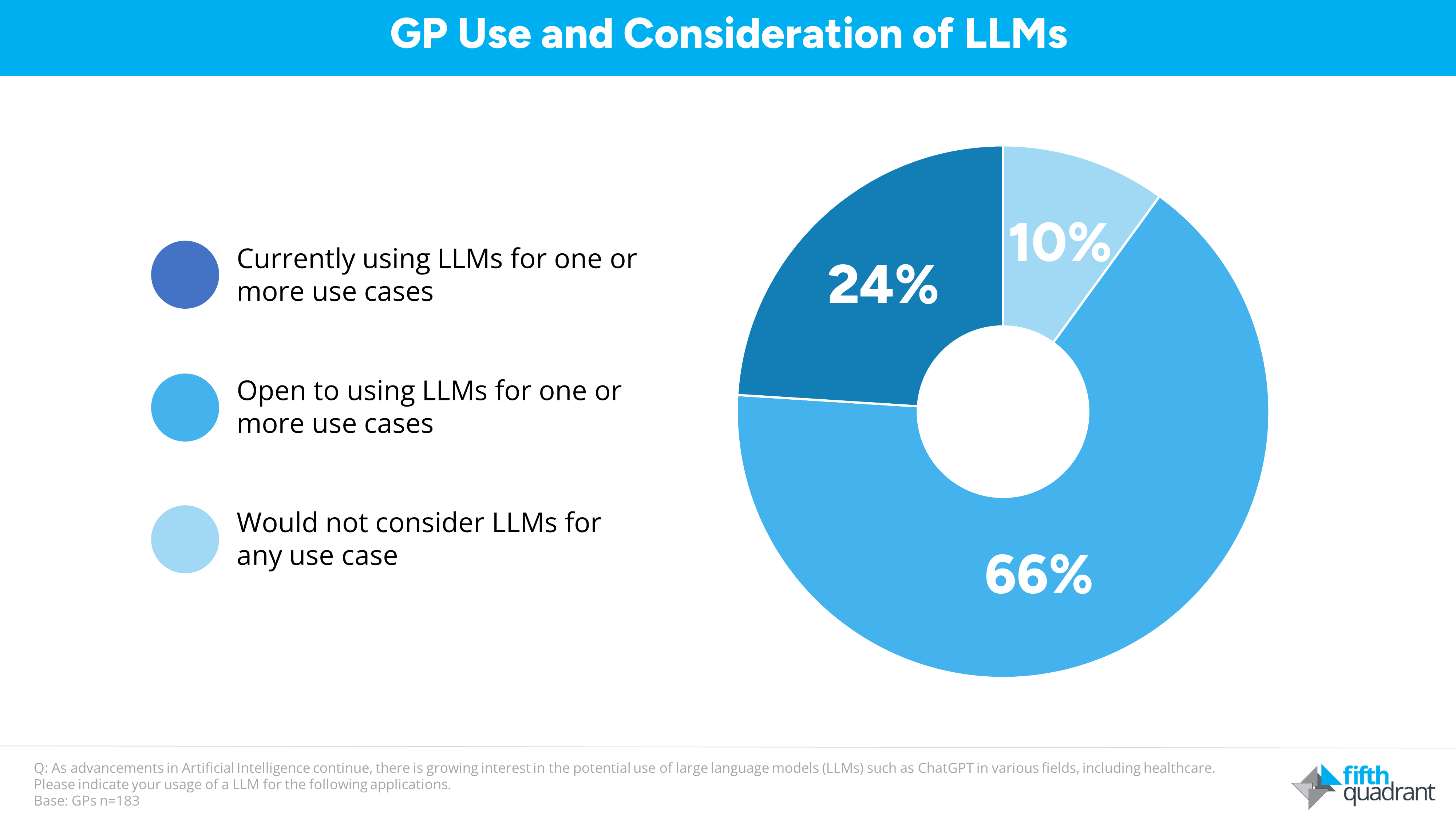

Our survey shows that most Australian GPs are open to using LLMs in their practice, with only a small pocket of resistance. Nearly one in four GPs are already using LLMs and 66% are open to using them. One in 10, who tend to be older GPs, said they would not consider using LLMs for any potential use case in their practice.

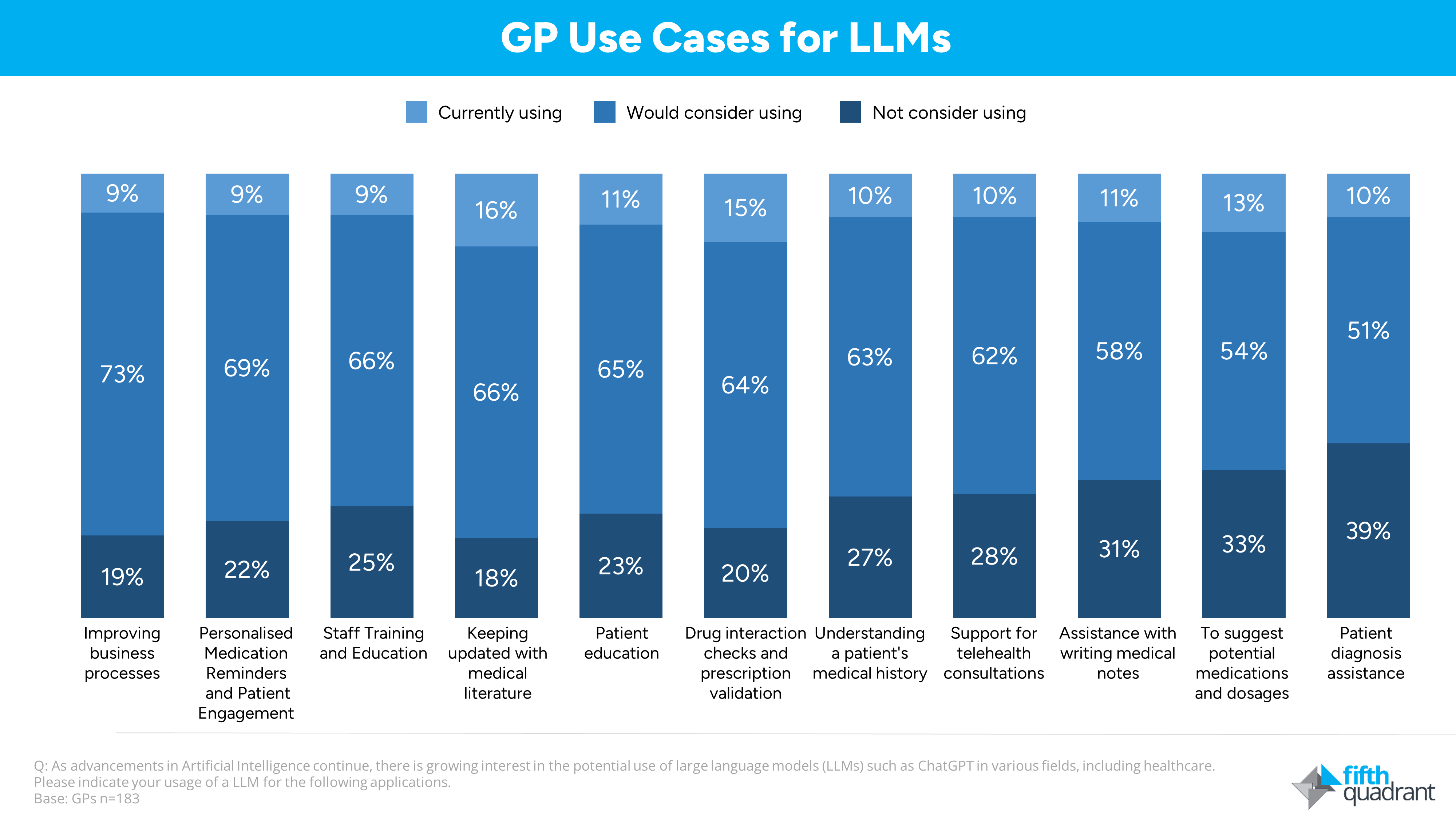

Many GPs are open to using LLMs to make better clinical and business decisions for the following use cases:

- To present complex medical information in a way that is easy for GPs to understand, so they can make informed decisions about patient care. 51% would consider using an LLM to help diagnose a patient, and 10% are already doing so. One in 10 have used an LLM to assist with writing medical notes, and 58% are open to this somewhat controversial use case.

- To analyse patient data and generate a list of potential risks and benefits of different treatment options. This can help GPs choose the best treatment option for patients. 64% would consider using LLMs for drug interaction checks and prescription validation, 63% would consider using LLMs to understand a patient’s medical history, and 54% would consider using LLMs to suggest potential medications and dosages.

- To stay up to date with the latest medical research by scanning large volumes of medical literature to identify the most relevant research for a particular patient case. This can help GPs make decisions based on the most recent evidence. 66% would consider using LLMs to keep up to date with the latest research published in medical journals.

- To educate patients about diagnoses and treatment options and for staff training and education. 65% would use LLMs for patient education and 66% would use LLMs for staff training.

- To streamline workflows and improve business processes, e.g. handling appointment scheduling, reminders, and follow-ups, conducting initial patient pre-screening and providing responses to frequently asked questions about medical procedures and pre- or post-appointment instructions. 73% of GPs are open to using LLMs to improve business processes.

Ethical issues with using LLMs in healthcare

The Australian Medical Association (AMA) released its position statement on the application of AI and LLMs in healthcare in August 2023. The AMA states that:

“The development and implementation of AI technologies must be undertaken with appropriate consultation, transparency, accountability and regular, ongoing review to determine its clinical and social impact and ensure it continues to benefit, and not harm, patients, healthcare professionals and the wider community.”

Elaborating on this, the AMA states that the application of AI in healthcare must only occur with appropriate ethics oversight. Fifth Quadrant’s Responsible Artificial Intelligence Index provides a comprehensive assessment of ethical, responsible and trustworthy AI practices. In the context of a primary healthcare setting, our survey of GPs highlights the urgent need for policy and regulation about how to use LLMs responsibly.

Until such policies have been developed, GPs and general practices should use LLMs with caution. The following suggestions are offered about using LLMs in general practice:

- Be aware of the limitations of LLMs and do not rely on them solely for making clinical decisions.

- Use LLMs in conjunction with other resources, such as medical databases, textbooks, and the advice of other healthcare professionals.

- Verify the accuracy of information generated by LLMs, for example by checking with other healthcare professionals or consulting medical databases and literature.

- Understand the medical data sets that have been used to train LLMs.

- Consider whether LLMs are subject to medical device regulation by the Therapeutic Goods Administration (TGA).

Conclusion

LLMs have the potential to revolutionise the way that GPs practise medicine. LLMs can help GPs to diagnose and treat patients more accurately and efficiently, to stay up to date with the latest medical research, to educate patients, and to automate administrative tasks.

However, it is important to be aware of the ethical issues with using LLMs in healthcare and to use them safely. GPs should be aware of the limitations of LLMs, use them in conjunction with other resources, and verify the accuracy of the information they generate.

The Fifth Quadrant team is highly experienced in conducting healthcare and technology market research. To learn more about our capabilities, or if you have a brief to share, please get in touch.
