Author: Amelia McVeigh | Posted On: 15 Feb 2024

In today’s digital era, AI is a vital tool. But, as with any new technology, it is crucial to know how to get the most out of it and where it falls short. As market researchers, we’re diving deep into AI, stress-testing the tools, and exploring their limits. As part of this process, we’ll be testing different myths around AI to see how the models perform. First challenge: Can AI beat humans when it comes to making predictions?

The Challenge

For this year’s Triple J Hottest 100, the Fifth Quadrant team were each asked to submit their predicted top 10 songs. Picking the top song would get you 100 points, number 2 would get you 99 points, and so forth. A perfect score would give you a total point tally of 955. The challengers (humans) could use ANY source for their research, and had to lock in their picks before the deadline of Thursday 25 January. We then asked ChatGPT and Bard (now Gemini) to ‘predict the top 10 for the 2024 Triple J Hottest 100’, entering their responses in the sweeps too.
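For anyone who wants to check the maths, here is a minimal Python sketch of the scoring. It assumes each pick earns 101 minus the position where that song actually finished, and that a pick outside the countdown earns nothing; the post does not spell out either detail, so treat both as our reading of the rules. The function names and the sample entry are hypothetical, made up for illustration.

```python
def points_for_rank(rank: int) -> int:
    """Points for a song that finished at `rank` (1 = top of the countdown)."""
    # Assumption: 1st earns 100, 2nd earns 99, ... 100th earns 1.
    # Assumption: anything outside 1..100 earns nothing.
    return 101 - rank if 1 <= rank <= 100 else 0


def score_entry(picks: list[str], final_ranks: dict[str, int]) -> int:
    """Total points for one entry, given the final countdown positions."""
    # Songs missing from `final_ranks` get rank 0, which scores zero.
    return sum(points_for_rank(final_ranks.get(song, 0)) for song in picks)


# A perfect entry names the whole top 10: 100 + 99 + ... + 91.
perfect_score = sum(points_for_rank(rank) for rank in range(1, 11))
print(perfect_score)  # 955

# Final positions mentioned in this post.
final_ranks = {
    "Paint the Town Red": 1,
    "Chemical": 10,
    "Not Strong Enough": 30,
}

# Hypothetical partial entry, including one song that missed the countdown.
sample_picks = ["Paint the Town Red", "Not Strong Enough", "Some Uncharted Song"]
sample_score = score_entry(sample_picks, final_ranks)
print(sample_score)  # 100 + 71 + 0 = 171
```

Under this reading, a pick that lands just outside the top 10 still earns most of its points, which would explain how an entry can miss a few top 10 songs and still score close to 955.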

The results

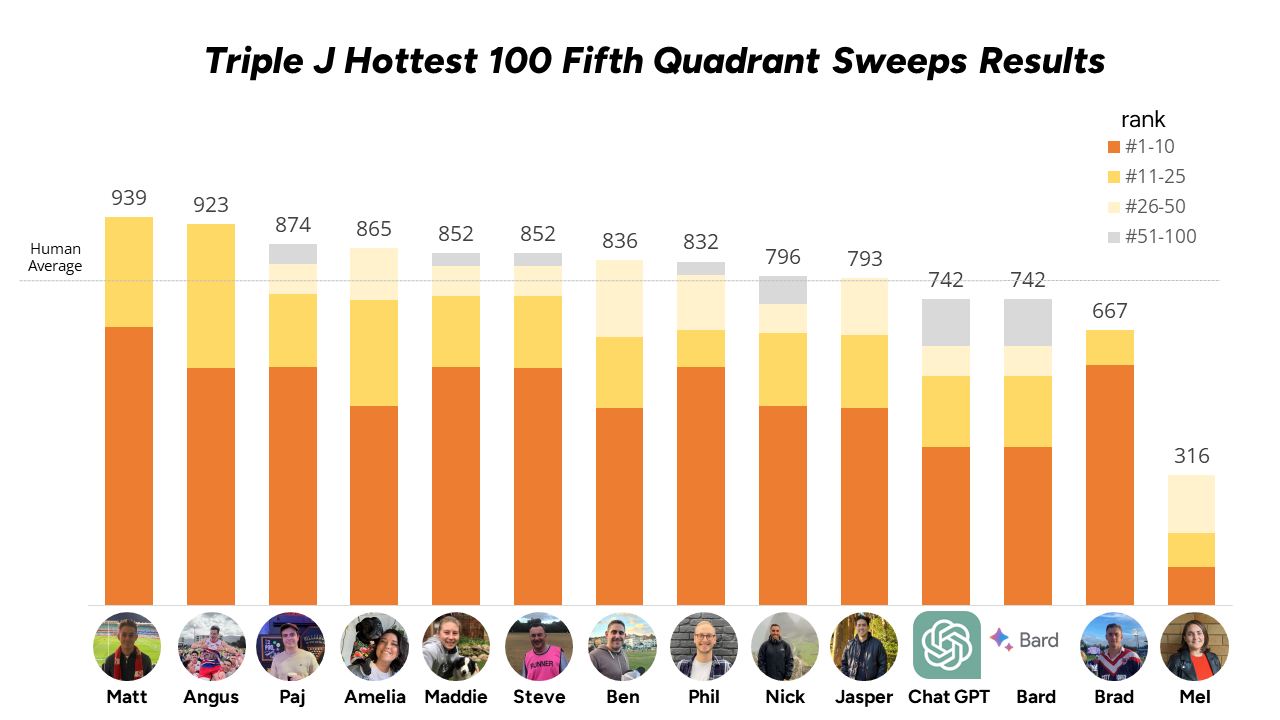

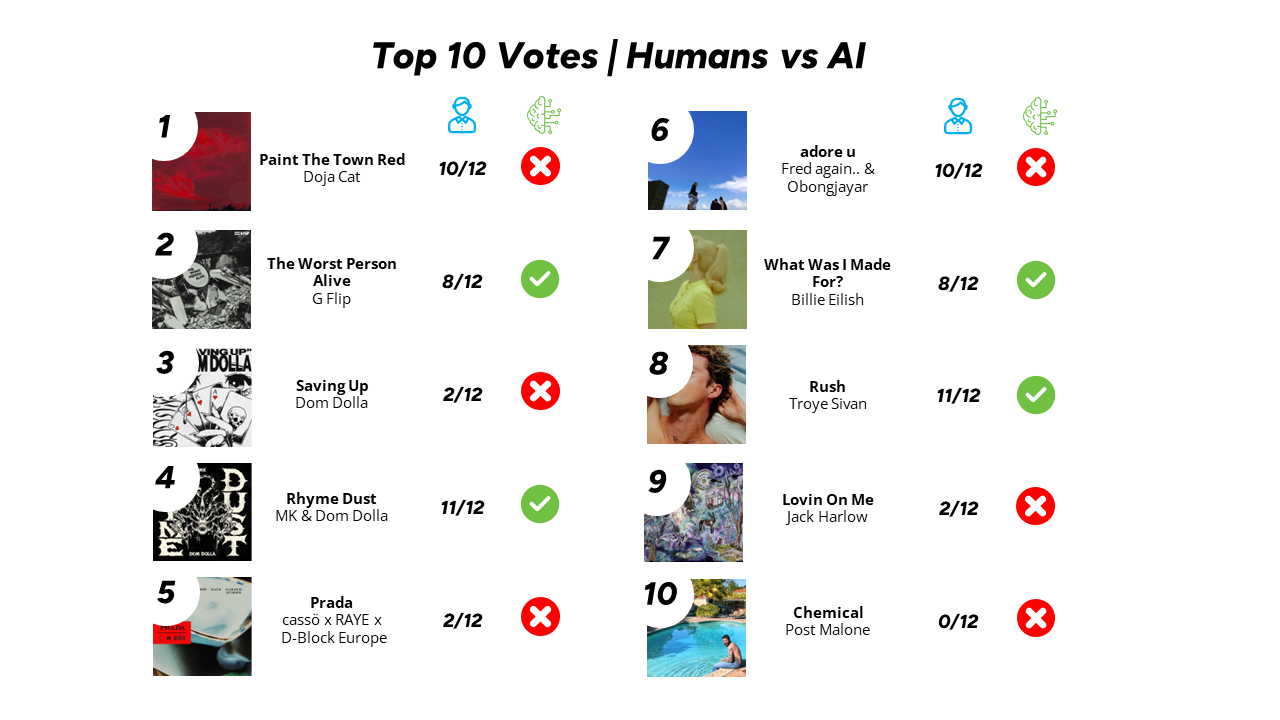

As the results above show, the humans won pretty convincingly. Our winner Matt scored a total of 939, missing just three of the top 10 songs. In contrast, the AIs both submitted the same list, scoring 742 and ranking equal 11th. ChatGPT and Bard picked just four songs in the top 10, completely missing the number 1, Paint the Town Red by Doja Cat.

Highlights

- Humans outperformed the AI on all metrics:

  - Average score: Human 795 vs. AI 742

  - Top 10 songs picked: Human 5.5 vs. AI 4

  - Share of correct songs: Human 81% vs. AI 60%

- No system is perfect: Neither humans nor the AIs guessed #10 Chemical – Post Malone

- Eight humans guessed Not Strong Enough – boygenius, which only made it to 30th in the rankings

Contrasting Approaches

Bard stated that it sourced its information from 100 Warm Tunas, a dedicated Triple J Hottest 100 prediction website, based on early voting trends. While it might have used additional sources, it is unclear how widely it cast the net. In contrast, the humans used different techniques; some voted with the heart, while others took a more data-centric approach, tapping into a range of sources, from news sites to bookies and of course also tapping into AI tools. The humans then distilled this information, applying their own intuition to build the perfect top 10.

What did we learn?

What does this tell us about the state of AI? The models are a great starting point, but their outputs are likely to need work to get to the best end product. While the humans emerged victorious in this particular contest, the performance of AI models like ChatGPT and Bard underscores the need for a nuanced understanding of AI’s capabilities and limitations.

AI models have access to impressive processing power and knowledge bases, but still struggle to match the intuitive leaps and emotional depth of human decision-making. The gap between human and AI predictions in the Hottest 100 highlights the importance of leveraging AI as a complementary tool, and not a replacement for human intuition and expertise.

As we continue to debunk myths and explore the frontiers of AI innovation, let us embrace the potential of human-AI synergy, forging a path towards a more insightful and informed future. Sign up for our newsletter to join us on our journey. Also remember that our B2B and consumer tracking research runs monthly. Click here to find out more, and feel free to get in touch if you’ve got questions that you’d like answered.
