Author: Dr Steve Nuttall | Posted On: 10 Dec 2025

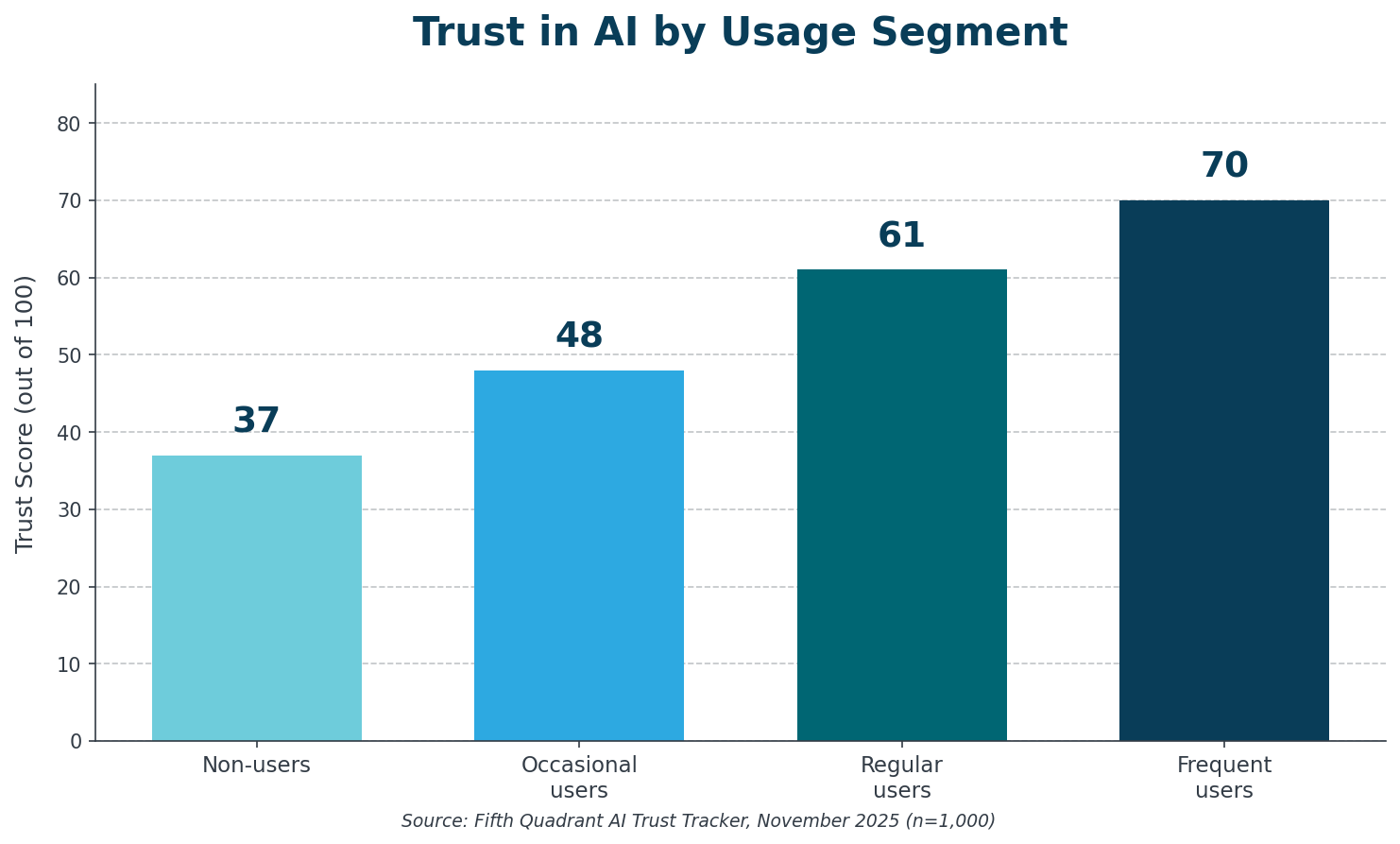

Over 13 million Australians are now using generative AI tools like ChatGPT, yet AI trust remains stubbornly low. Fifth Quadrant’s latest research, the AI Trust Index, reveals that whilst six in ten Australians have embraced generative AI, overall trust sits at just 51 out of 100. The Index exposes a striking paradox: Australians who have never used generative AI tools give it a trust score of 37, whilst those who use these tools daily score nearly double, at 70. This trust gap between users and non-users of GenAI provides insight into how Australians develop confidence in artificial intelligence.

The findings, released alongside the Australian Government’s National AI Plan, arrive at a pivotal moment. With responsible AI practices now positioned at the centre of Australia’s AI strategy, understanding public trust has become critical for organisations deploying AI systems.

AI scepticism stems from unfamiliarity

Lack of trust does not stem from AI itself but from unfamiliarity with it. Our nationally representative survey of 1,000 Australians measured AI trust across ten everyday scenarios, from customer service chatbots to medical advice systems. The pattern was consistent: people who actively use AI show significantly higher trust than those who don’t.

This challenges the common narrative that low trust is holding back AI adoption. The evidence suggests the opposite: adoption drives trust, not the other way around. The 40% of Australians who have never used generative AI are sceptical precisely because they lack first-hand experience of it.

Context matters

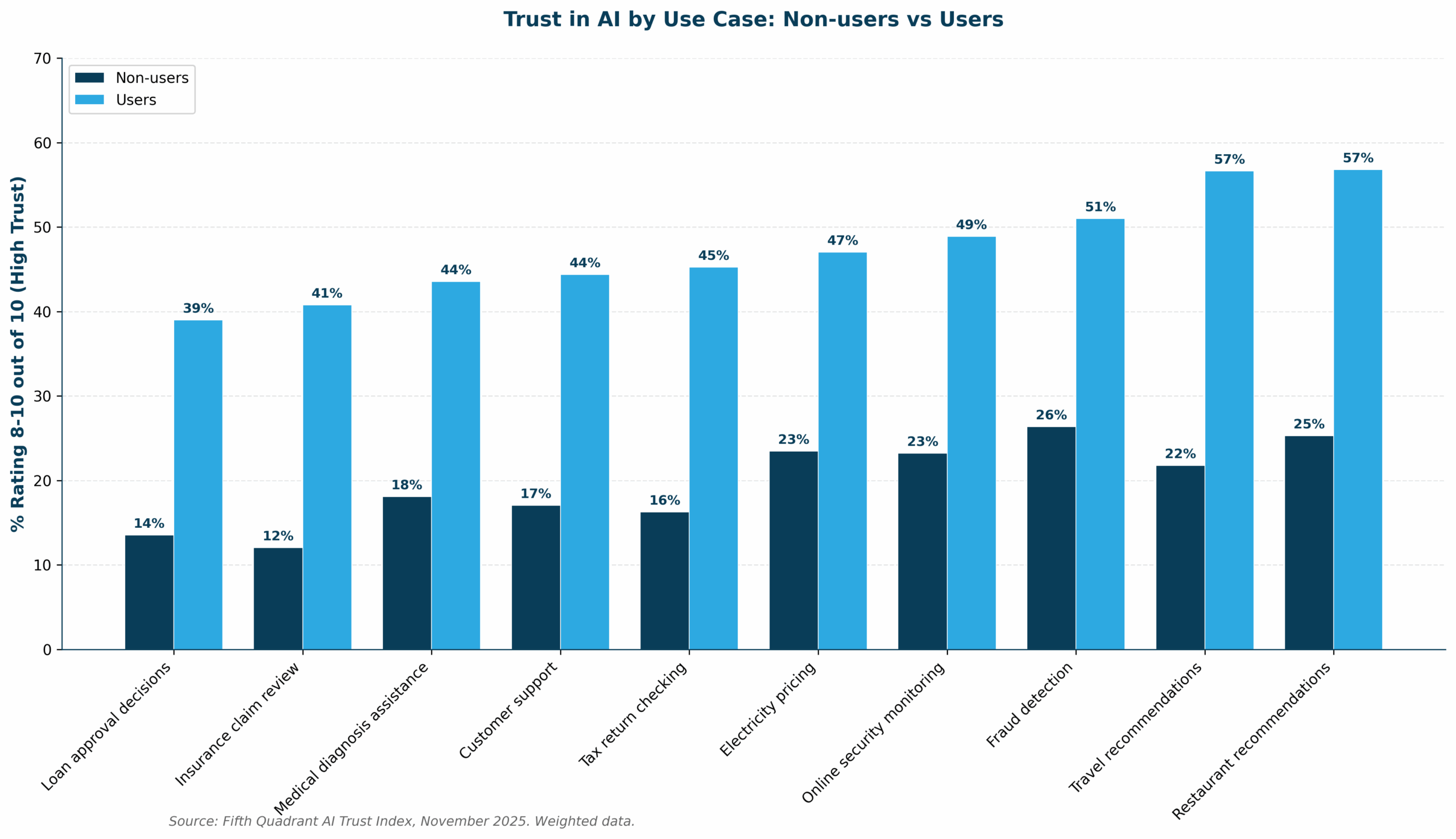

Crucially, trust is not uniform across AI applications. Australians are not rejecting AI wholesale; they are calibrating their confidence to the consequences at stake:

- Low-Stakes Contexts: Australians are more comfortable with AI in lifestyle contexts, or where it performs a protective function such as flagging suspicious payments.

- High-Stakes Contexts: Trust is lower in applications involving personal health, medical advice, loans, or insurance claims. When AI misjudges a loan application or health diagnosis, the stakes are personal and significant.

Experience raises expectations

Once users gain experience, their trust in the AI’s functionality rises, but so does their scrutiny of the organisation deploying it.

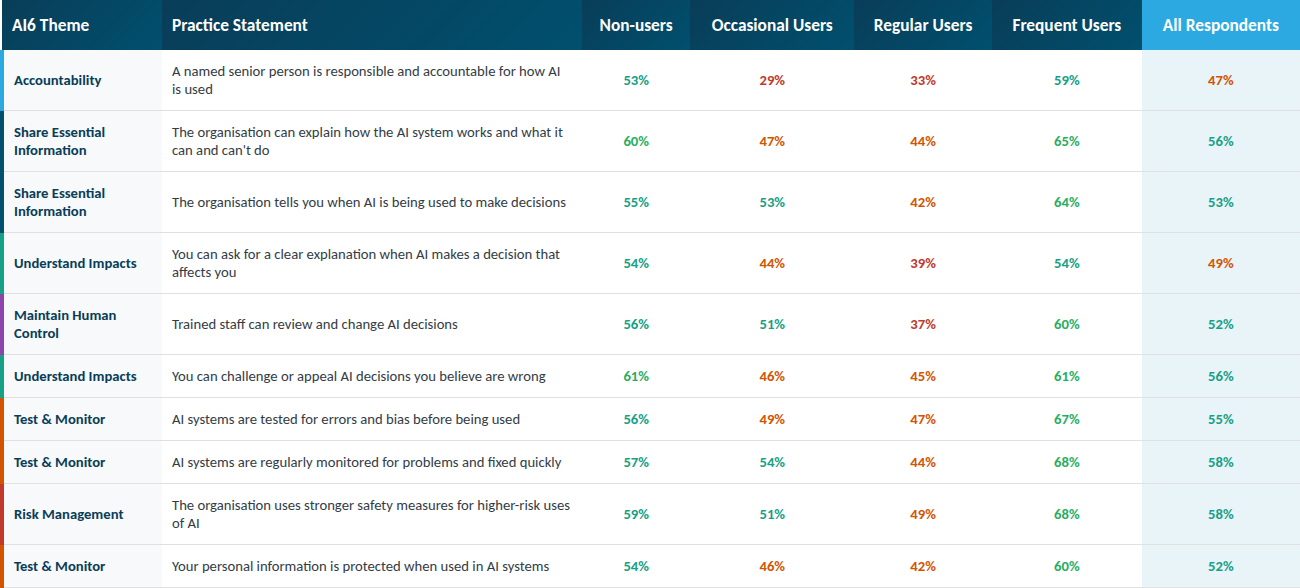

Experience breeds not blind faith, but calibrated confidence paired with heightened expectations for safety. These expectations align closely with the AI6 framework developed by the National AI Centre (NAIC), which identifies six essential practices for responsible AI governance: Accountability, Transparency (Share Essential Information), Human Control, Testing and Monitoring, Risk Management, and Stakeholder Impact (Understand Impacts). The table below maps consumer expectations to these AI6 themes, revealing how different user segments prioritise each practice.

Frequent AI users hold organisations to materially higher standards around responsible AI practices. These are informed users who demand accountability, transparency, and robust testing.

Expectations of Responsible AI Practices (% definitely expect this)

For organisations, meeting the elevated expectations of their most AI-literate customers becomes non-negotiable. Users want proof that errors are caught, bias is managed, and decision-making processes are clear.

What this means for organisations

The trust gap carries two clear implications for organisations seeking to align with the Australian Government’s AI strategy:

- Stop Waiting for Trust and Build Familiarity: You cannot wait for trust to materialise before deploying AI. Trust develops through exposure and experience, not through marketing or reassurance campaigns. The path forward is a managed introduction that respects user boundaries, starting with applications where the consequences of error are manageable.

- Meet the Higher Bar (Demonstrate Governance): For experienced users, you must demonstrate a genuine commitment to accountability, transparency, and robust governance. This means showing evidence of:

  - Rigorous Testing & Monitoring: AI systems are tested for errors and bias before being used.

  - Clear Accountability: A named senior person is responsible for how the AI is used.

  - Human Control: Staff are trained and authorised to review and change AI decisions.

The data shows Australians are ready to trust AI when they understand it. The remaining question is whether organisations are ready to meet the heightened expectations for safety that familiarity brings.

At Fifth Quadrant, we bring deep expertise in technology adoption and consumer behaviour research. Our Responsible AI Self-Assessment Tool complements the AI6 practices by helping Australian organisations evaluate their responsible AI (RAI) maturity. Our AI Trust Tracker provides ongoing measurement of Australian attitudes towards AI, helping organisations understand how trust evolves as deployment increases. Explore our latest technology insights or get in touch to discuss your research needs.
